Open-source 3D-video-based behavioral analysis system for neuroscience

Looking for more open-source tools for neuroscience? Search Open Ephys and OpenBehavior.

Project

The goal of this open-source project is to facilitate applications of 3D video analysis by developing and sharing an open-source, low-cost, and versatile 3D video analysis system named 3DTracker-FAB. It is based on a system previously developed in the Nishijo lab, which can analyze the behavior of rodents and monkeys in 3D without attaching any markers to the animals. The software will be free, and the total cost of the devices for the system will be less than 3,000 dollars. We are also preparing extensive documentation and an online discussion forum to facilitate improvements and applications.

We are calling for contributors (both users and developers) to help achieve this goal!

Why 3D?

Three-dimensional video-based behavioral analysis enables experiments and analyses that have been difficult with 2D video-based analysis. For example:

- Robust tracking of overlapped animals (e.g., social interaction)

- Estimation of detailed 3D postures

Why markerless?

Markers (or paint) on an animal’s body often disturb naturalistic behavior. By making the system markerless, we can analyze emotional postures, social interaction, and any behavioral test that does not allow long habituation or training with markers.

How it works

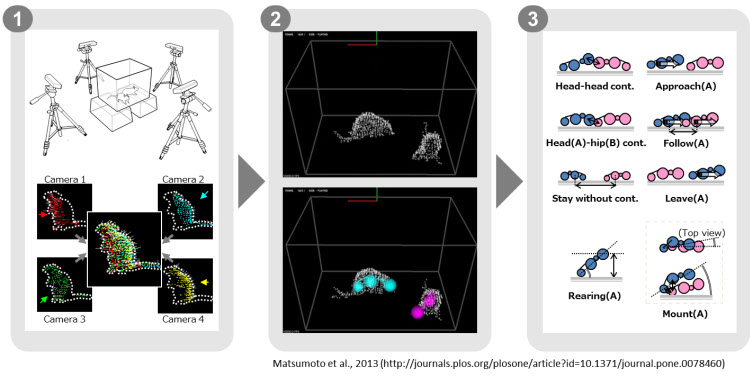

- A 3D video is reconstructed from the images of multiple (~4) depth cameras.

- Positions of body parts are estimated by fitting skeletal models to the 3D videos.

- Various behaviors are automatically detected based on the trajectories of body parts.
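
The first and third steps above can be illustrated with a minimal Python sketch. It is not the 3DTracker-FAB implementation: all function names, the calibration values, and the rearing rule (head height above a threshold for a minimum number of frames) are hypothetical and chosen only for illustration. It assumes each depth camera has a known rigid calibration (rotation and translation) into a shared world frame, and it skips the skeletal model fitting step entirely, taking the head-height trajectory as given.

```python
import math

def rotation_z(angle_rad):
    """3x3 rotation matrix about the vertical (z) axis, as nested lists."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def to_world(point, rotation, translation):
    """Map one camera-frame point into the shared world frame: R * p + t."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )

def merge_clouds(camera_clouds, extrinsics):
    """Concatenate per-camera point clouds after moving each into the world frame."""
    merged = []
    for cloud, (rotation, translation) in zip(camera_clouds, extrinsics):
        merged.extend(to_world(p, rotation, translation) for p in cloud)
    return merged

def detect_rearing(head_heights, threshold, min_frames):
    """Return (start, end) frame pairs (end exclusive) where the head height
    stays above `threshold` for at least `min_frames` consecutive frames."""
    bouts, start = [], None
    for frame, height in enumerate(head_heights):
        if height > threshold and start is None:
            start = frame
        elif height <= threshold and start is not None:
            if frame - start >= min_frames:
                bouts.append((start, frame))
            start = None
    # Close a bout that is still running at the end of the recording.
    if start is not None and len(head_heights) - start >= min_frames:
        bouts.append((start, len(head_heights)))
    return bouts

# Hypothetical setup: two cameras facing each other, 2 m apart along x.
# Each camera sees the same physical point in its own coordinate frame.
clouds = [[(1.0, 0.0, 0.5)], [(1.0, 0.0, 0.5)]]
extrinsics = [
    (rotation_z(0.0), (0.0, 0.0, 0.0)),
    (rotation_z(math.pi), (2.0, 0.0, 0.0)),
]
merged = merge_clouds(clouds, extrinsics)

# Hypothetical head heights in metres, one value per video frame.
heights = [0.05, 0.06, 0.15, 0.16, 0.17, 0.05, 0.14, 0.05]
bouts = detect_rearing(heights, threshold=0.12, min_frames=3)
```

In this toy setup both cameras' points land on the same world coordinate after the transform, and only the three-frame stretch with the head raised is reported as a bout; the single raised frame near the end is rejected as too short. A real system would use calibrated extrinsics and trajectories produced by the skeletal model fitting.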

Publications

- Matsumoto J, Nishimaru H, Ono T, Nishijo H. (2017) 3D-video-based computerized behavioral analysis for in vivo neuropharmacology and neurophysiology in rodents. In: In Vivo Neuropharmacology and Neurophysiology, pp. 89-105. Humana Press, New York, NY [Springer]

- Nakamura T, Matsumoto J, Nishimaru H, Bretas R, Takamura Y, Hori E, Ono T, Nishijo H. (2016) A markerless 3D computerized motion capture system incorporating a skeleton model for monkeys. PLoS One 11:e0166154 [PubMed]

- Matsumoto J, Nishimaru H, Takamura Y, Urakawa S, Ono T, Nishijo H. (2016) Amygdalar auditory neurons contribute to self-other distinction during ultrasonic social vocalization in rats. Front Neurosci 10:399 [PubMed]

- Matsumoto J, Uehara T, Urakawa S, Takamura Y, Sumiyoshi T, Suzuki M, Ono T, Nishijo H. (2014) 3D video analysis of the novel object recognition test in rats. Behav Brain Res 272:16-24 [PubMed]

- Matsumoto J, Urakawa S, Takamura Y, Malcher-Lopes R, Hori E, Tomaz C, Ono T, Nishijo H. (2013) A 3D-video-based computerized analysis of social and sexual interactions in rats. PLoS One 8:e78460 [PubMed]